DevSecOps – a practitioner's perspective

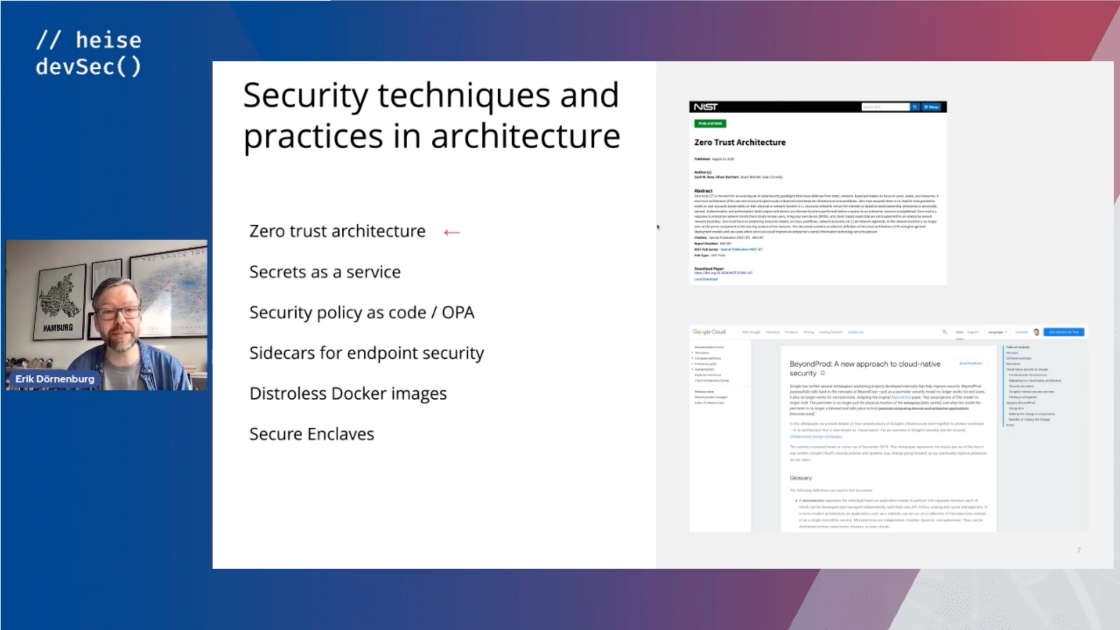

Closer collaboration between developers and operations people brought businesses many benefits. It is also fair to say, though, that it created new headaches. Some practices, especially continuous deployments, forced us to rethink the traditional security sandwich, with conceptual work up-front and a pen test at the end. It was easy to sneak a “Sec” into DevOps, it was reasonably obvious to call for security to be “shifted left”, but in practice this raised even more questions.

Based on his experience working as a consultant, Erik will address these questions. He will discuss practices like container security scanning, binary attestation, and chaos engineering, alongside examples of concrete tooling to support these practices. In addition, Erik will show how the concept of fitness functions, which have become popular in evolutionary approaches to architecture, can be applied in the security domain.
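To make the idea of a security fitness function a little more concrete, here is a minimal sketch in Python. It is not taken from the talk: the `Finding` type, the severity scale, and the sample data are all hypothetical, and a real pipeline would parse the output of whatever container scanner is in use. The function simply reports every finding that is more severe than an agreed threshold, so a build can fail when the list is not empty.

```python
from dataclasses import dataclass

# Hypothetical severity scale for illustration; real scanners define their own.
SEVERITY_RANK = {"low": 1, "medium": 2, "high": 3, "critical": 4}


@dataclass(frozen=True)
class Finding:
    """One result from a (hypothetical) container image scan."""
    image: str
    vulnerability_id: str
    severity: str


def security_fitness(findings, max_allowed="medium"):
    """Return the findings that violate the fitness function.

    The system counts as 'fit' when the returned list is empty, i.e. no
    finding is more severe than max_allowed.
    """
    limit = SEVERITY_RANK[max_allowed]
    return [f for f in findings if SEVERITY_RANK[f.severity] > limit]


if __name__ == "__main__":
    # Made-up sample data, standing in for parsed scanner output.
    findings = [
        Finding("shop-frontend:1.4", "EXAMPLE-0001", "low"),
        Finding("shop-frontend:1.4", "EXAMPLE-0002", "critical"),
    ]
    for v in security_fitness(findings):
        print(f"FAIL {v.image}: {v.vulnerability_id} ({v.severity})")
```

Run as part of every build, a check like this turns a security goal into something that is evaluated continuously rather than once at the end.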

Patterns for Micro Frontends

Architectures based on microservices have spread rapidly in the past few years. Organisations are drawn to the promise of independent evolvability, which allows them to reduce cycle time and scale development. At the same time, in many software solutions the majority of the codebase is now running in the web browser, which leads to an often underestimated challenge: the software design of the frontends. All too often teams have well-structured services running on the servers but a big, entangled monolith in the browser.

In this talk Erik describes a number of patterns, harvested from practical use, that allow teams to avoid the dreaded frontend monolith and build software solutions that fully deliver on the promise of microservices. The patterns range from the simple, using edge-side includes to do dynamic, yet cacheable, server-side composition, to the complex, including an example of how to compose a React application inside the web browser.
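As an illustration of the simple end of that range, the following Python sketch shows what edge-side include composition does in principle. It is an assumption-laden toy, not the setup described in the talk: in practice a CDN or a reverse proxy such as Varnish resolves the `<esi:include>` tags, and the fragment URLs and the `fetch_fragment` callback here are hypothetical stand-ins for HTTP requests to the teams' fragment services.

```python
import re

# Matches self-closing ESI include tags such as <esi:include src="/header"/>.
ESI_INCLUDE = re.compile(r'<esi:include\s+src="([^"]+)"\s*/>')


def compose(page, fetch_fragment):
    """Replace every ESI include tag in page with the fetched fragment.

    fetch_fragment is a callable that maps a src URL to markup. Here it is
    a stand-in for an HTTP request made by the edge server.
    """
    return ESI_INCLUDE.sub(lambda match: fetch_fragment(match.group(1)), page)


if __name__ == "__main__":
    # Hypothetical fragments, each owned by a different team.
    fragments = {
        "/fragments/header": "<header>Shop</header>",
        "/fragments/basket": "<aside>2 items</aside>",
    }
    page = (
        '<body><esi:include src="/fragments/header"/>'
        '<main>Product list</main>'
        '<esi:include src="/fragments/basket"/></body>'
    )
    print(compose(page, fragments.__getitem__))
```

Because the page template and each fragment are fetched separately, each one can be cached with its own lifetime, which is what makes the composition both dynamic and cacheable.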

All Roads Lead to DevOps

Today it is hard to imagine that fifteen years ago agile development was a niche approach, considered too radical to be used in the mainstream. Similarly, when the DevOps movement started about five years ago only a small number of innovative organisations took note. They quickly gained competitive advantages, which then led to more and more interest in the movement.

Erik will talk about experiences organisations have had with DevOps. He will discuss processes, tools, and organisational structures that led to the successful merging of development and operations capabilities, and he will describe how DevOps fits with other trends such as Microservices and Public Clouds. All of this forms a picture that allows only one conclusion: sooner or later all successful organisations will move to a DevOps model.

Our Responsibility to Defeat Mass Surveillance

Our talk will begin with one of the core themes of the early development of agile software - that those involved in software development should take a more collaborative role, not just building software but helping to determine how software can help its users. We believe that this engagement requires greater knowledge of a user's goals and also responsibility for the user's welfare and our impact on the world. While the internet has brought great benefits in communication, it's also led to an unprecedented opportunity for mass surveillance, both by states and private corporations.

We'll discuss how defeating such surveillance requires greater security in our communication, reversing recent centralization, and attention to user experience. For the last year Erik has been leading a team to apply these principles to email. We will explain why the argument of "I have nothing to hide" is flawed and why it is our responsibility to take up this task.

AutoScout24: How the cloud makes us more agile

Agile development practices were well established at AutoScout24 when we embarked on a project to move from hosting in data centres to the AWS cloud. The move allowed us to build on our experience with agile development and become even more agile through some of the opportunities offered by a public cloud solution.

In this talk Philipp and Erik report on their first-hand experience on Tatsu, the project that transforms the existing, mature, IT setup into a next generation web-scale IT platform. They describe how the team benefited from elasticity beyond production by introducing elastic computing to development and data analysis tasks. They discuss how a cloud environment greatly helped with the restructuring towards “two-pizza” teams that work with a “you build it, you run it” mindset. Additionally, Philipp and Erik explain how architecture decisions that have an impact on infrastructure can be made more freely in a cloud environment, resulting in solutions that are a better fit for the problem.

Questions for an Enterprise Architect

Following the success of agile and lean principles for individual projects we are now seeing interest in applying the same principles across the entire enterprise. This brings agile and lean thinking to architecture groups, and raises questions around enterprise architecture and governance.

In this session Erik introduces the concept of evolutionary architecture and then discusses questions such as: How can an architecture strategy be executed in a lean context? What about conformance? And: where do the architects sit in a lean enterprise?

Public presentations

- Java Forum Stuttgart 2012 - Stuttgart, Germany

- GOTO Conference 2011 - Århus, Denmark - Video, Slides

- Keynote, JAX 2011 - Mainz, Germany - Video (German)

- Agile, Lean, and Kanban eXchange 2010 - London, UK

Software quality - you know it when you see it

Software quality has an obvious external aspect, the software should be of value to its users, but there is also a more elusive internal aspect to quality, to do with the clarity of the design, the ease with which we as technologists can understand, extend, and maintain the software. When pressed for a definition, this is where we usually end up saying "I know it when I see it." But how can we see quality? This session explains how visualisation concepts can be applied at the right level to present meaningful information about quality. Different visualizations allow us to spot patterns, trends, and outliers. In short, they allow us to see the quality of our software. The tools and techniques shown are easy to apply on software projects and will guide the development team towards producing higher quality software.

Public presentations

- 33rd Degree 2013 - Warsaw, Poland

- Developer Conference Hamburg 2012 - Hamburg, Germany

- GOTO Geek Night 2012 - Hamburg, Germany

- GOTO Conference Copenhagen 2012 - Copenhagen, Denmark - tutorial

- QCon San Francisco 2011 - San Francisco, USA - Video

- GOTO Conference 2011 - Århus, Denmark - tutorial

- SEACON 2011 - Hamburg, Germany - tutorial

- QCon London 2011 - London, UK - Video

- Agile, Lean, and Kanban eXchange 2010 - London, UK

- JAX London 2010 - London, UK

- Australian Computer Society (Nov 2009) - Sydney, Australia - Slides

- JAX Asia 2008 - Singapore / Kuala Lumpur, Malaysia / Jakarta, Indonesia

- JAOO Australia 2008 - Brisbane and Sydney, Australia

Software Visualization and Model Generation

Models are often viewed as something you create during design time and use to generate code. What if we turn the approach upside down and generate models from code? Humans are very good at recognizing patterns in images, making visualizations a valuable tool, for example to recognize dependencies or data flow. This is particularly true for dynamic, loosely coupled systems that are often less explicit and evolve over time. Once you have generated a model you can take things a step further and run checks and validations against it. Visualizations can also be used to plot out source code metrics over various dimensions to detect potential "hot spots" in the application that may require special attention.

This talk applies the concepts of visualization and model generation to a broad range of usage scenarios, such as asynchronous messaging, software components and object-oriented applications.
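The idea of generating a model from code and then checking it can be sketched in a few lines of Python. This example is not from the talk and makes several assumptions: it treats import statements as the only kind of dependency, the module names and the forbidden-dependency rule are hypothetical, and the output is Graphviz DOT text that a tool such as `dot` could render.

```python
import ast


def imported_modules(source):
    """Return the set of module names imported by a piece of Python source."""
    modules = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            modules.update(alias.name for alias in node.names)
        elif isinstance(node, ast.ImportFrom) and node.module:
            modules.add(node.module)
    return modules


def dependency_model(sources):
    """Build the model: a mapping from module name to its sorted imports."""
    return {name: sorted(imported_modules(text)) for name, text in sources.items()}


def to_dot(model):
    """Render the model as Graphviz DOT text for visualization."""
    lines = ["digraph dependencies {"]
    for module, deps in sorted(model.items()):
        for dep in deps:
            lines.append(f'  "{module}" -> "{dep}";')
    lines.append("}")
    return "\n".join(lines)


def violations(model, forbidden):
    """Check the model against a set of forbidden (module, dependency) pairs."""
    return sorted((m, d) for m, deps in model.items() for d in deps if (m, d) in forbidden)


if __name__ == "__main__":
    # Hypothetical three-module system, given as source text.
    sources = {
        "web": "import orders\nfrom billing import invoice\n",
        "orders": "import billing\n",
        "billing": "",
    }
    model = dependency_model(sources)
    print(to_dot(model))
    # Example rule (an assumption): the web layer must not reach into billing.
    print(violations(model, {("web", "billing")}))
```

The same model serves two purposes here: `to_dot` feeds the visualization, while `violations` is the kind of automated check that can run against the generated model on every build.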

Public presentations

- JAOO Australia 2009 - Sydney and Brisbane, Australia

- JAOO Australia 2008 - Brisbane and Sydney, Australia - with Gregor Hohpe

- TSS Java Symposium Europe 2007 - Barcelona, Spain - with Gregor Hohpe

- ExpertZone Developer Summit 2007 - Stockholm, Sweden

- OOP 2007 - Munich, Germany - with Gregor Hohpe

- JAOO 2006 - Århus, Denmark

- JavaZone 2006 - Oslo, Norway - with Gregor Hohpe

- TheServerSide Java Symposium Europe 2006 - Barcelona, Spain - with Gregor Hohpe

- TheServerSide Java Symposium 2006 - Las Vegas, USA - with Gregor Hohpe

Archived talks

Even more talks are listed on this page.